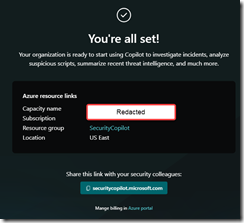

Beginning April 1st, 2024, you can now purchase and deploy Microsoft Security Copilot. The setup process takes less than 5 minutes to complete.

I am going to share my experience of setting it up and some considerations for post installation.

Prerequisites

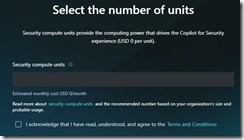

– An Azure Subscription

– Authority to spend at least $2,880 per month (this is the minimum price to get started)

– Security Administrator or Global Administrator permissions

– At least one of the following technologies already deployed: Defender for Endpoint, Sentinel, Intune, Entra, or Purview.

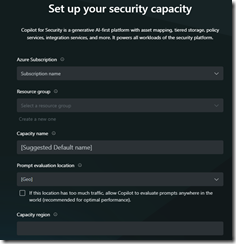

Installing Copilot for Security

https://securitycopilot.microsoft.com/tour/admin

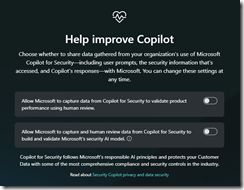

Here are the screenshots of the setup process:

Managing Security Copilot is then performed at: https://securitycopilot.microsoft.com

When you first sign in, you are greeted with some adoption guidance:

You then pick a theme:

You are reminded that your information is protected.

You are then greeted.

You are then given a walkthrough. If you scroll down, you will see the prompt bar below the chat window.

Don’t know what to type in? There is a button to click on with sample prompts.

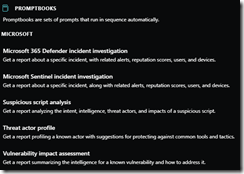

Here are some of the “Prompt Books” to try out:

Then there is a long list of “System Capabilities,” which are based on the plug-ins that you have set up.

There are probably about 100 more to select from.

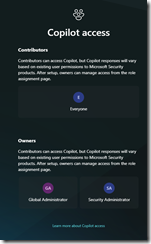

Role Based Access Control

Copilot for Security roles

Copilot for Security introduces two roles that function like access groups but aren’t Microsoft Entra ID roles. Instead, they are application roles inside Copilot.

- Copilot owner

- Copilot contributor

The following Microsoft Entra roles automatically inherit Copilot owner access, and these cannot be removed:

- Security Administrator

- Global Administrator

The following Microsoft Entra roles automatically inherit Copilot Contributor access, and these cannot be removed:

- Security Operator

- Security Reader

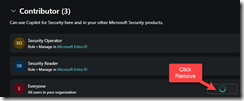

“By default, All users in the Microsoft Entra tenant are given Copilot contributor access.” Say what??

This can’t be right? Why would all users in your company need contributor rights to Copilot? The documentation page for Copilot for Security does not attempt to rationalize this default setting.

So the first thing to do is restrict who can create Copilot sessions, since the Security Compute Unit (SCU) is not cheap and you want to control costs. To customize the RBAC and remove Contributor rights from the Everyone group, navigate to the Role Assignment page here:

https://securitycopilot.microsoft.com/role-assignment

Then expand contributor and click remove to the right of “Everyone.”

Or if you want to navigate to this page manually, here are the navigation instructions:

- Select the home menu.

- Select Role assignment > expand contributor and click remove next to “Everyone”

- Note: You can also give B2B guests or your MSSP access to administer or use Copilot.
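The inheritance rules in this section can be summarized in a small sketch. This is only an illustrative model of the behavior described above, not how Copilot for Security implements it; the names `INHERITED_ROLE` and `effective_copilot_role` are hypothetical.

```python
# Illustrative model only: Entra role -> inherited Copilot for Security role,
# as described in this section. All names here are hypothetical.
INHERITED_ROLE = {
    "Global Administrator": "owner",
    "Security Administrator": "owner",
    "Security Operator": "contributor",
    "Security Reader": "contributor",
}


def effective_copilot_role(entra_roles, everyone_is_contributor=True):
    """Return 'owner', 'contributor', or None for a user.

    everyone_is_contributor models the default "Everyone" assignment;
    pass False to model the tenant after "Everyone" has been removed.
    """
    inherited = {INHERITED_ROLE[r] for r in entra_roles if r in INHERITED_ROLE}
    if "owner" in inherited:
        return "owner"
    if "contributor" in inherited or everyone_is_contributor:
        return "contributor"
    return None


# A regular user with no security roles, before and after removing "Everyone":
print(effective_copilot_role([]))
print(effective_copilot_role([], everyone_is_contributor=False))
# A Security Reader keeps contributor access either way:
print(effective_copilot_role(["Security Reader"], everyone_is_contributor=False))
```

In this model, removing “Everyone” only affects users who hold none of the four Entra roles listed above, which is the point of the change: ordinary users can no longer start sessions that consume SCUs.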

Getting Started

I selected the “Microsoft Defender Incident Investigation” Prompt Book.

After typing in an Incident ID, I then ran the prompt book. The prompt book contained the following 7 prompts, but it also gave me an option to change or add prompts. When I clicked “Run” it then ran these prompts in sequence. I am noting the elapsed time that each prompt took to complete:

1. Summarize Defender incident <DEFENDER_INCIDENT_ID>. (21 Seconds elapsed)

2. Tell me about the entities associated with that incident. (22 seconds)

3. What are the reputation scores for the IPv4 addresses on that incident? (8 seconds)

4. Show the authentication methods setup for each user involved in that incident. Especially indicate whether they have MFA enabled. (36 seconds)

5. If a user is listed in the incident details, show which devices they have used recently and indicate whether they are compliant with policies. (25 seconds)

6. If any devices are listed in the previous output, show details from Intune on the one that checked in most recently. Especially indicate if it is current on all operating system updates. (19 seconds)

7. Write an executive report summarizing this investigation. It should be suited for a non-technical audience. (55 seconds)
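As a quick check on the timings noted in the list above, here is a short sketch that totals them. The values are copied from the list; nothing else is assumed.

```python
# Elapsed seconds for prompts 1-7, copied from the list above.
elapsed_seconds = [21, 22, 8, 36, 25, 19, 55]

total = sum(elapsed_seconds)
minutes, seconds = divmod(total, 60)
print(f"{len(elapsed_seconds)} prompts, {total} s total = {minutes} min {seconds} s")
# prints: 7 prompts, 186 s total = 3 min 6 s
```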

So in total, it took just over 3 minutes to run the seven prompts and produce the following executive report:

# Executive Summary of Security Incident

On April 1, 2024, a security incident of medium severity occurred involving a user named ‘John Doe’. The incident was triggered by multiple failed login attempts to the cloud application Microsoft 365.

## Incident Details

The incident was titled ‘Multiple failed user log on attempts to an app involving one user’. It was first detected at 15:45:38 UTC on April 1, 2024. As of now, the incident remains open and is yet to be classified.

## User Details

The user involved in this incident, ‘John Doe’, has multiple authentication methods set up, including password authentication, Microsoft Authenticator, and FIDO2 Authentication Method. This indicates that the user has Multi-Factor Authentication (MFA) enabled, adding an extra layer of security to their account.

## Device Details

The user ‘John Doe’ has been recently using a device named ‘device-name’. This device is a Microsoft Corporation Cloud PC Enterprise 8vCPU/32GB/512GB running Windows 10.0.22631.3296. The device is compliant with the policies, indicating that it meets the security standards set by the organization.

## Current Status

As of now, no investigation, remediation, or follow-up actions have been taken on this incident. The incident remains open and is being monitored for any further suspicious activities.

This report aims to provide a high-level overview of the incident. Further technical details can be provided if necessary.

First Impressions

My first impression was the setup process was fast, simple and efficient. I had no prior training and was able to complete the setup in minutes. The guided walkthrough had me understanding and using promptbooks immediately.

My only criticism is that the prompt book question:

“What are the reputation scores for the IPv4 addresses on that incident?” was unable to find the actual IP addresses associated with the failed sign-in attempts. Even after I re-wrote the prompt to a more complete one as follows:

“What are the reputation scores for the IPv4 addresses on that incident? If no IPv4 addresses are associated with the incident, then lookup recent sign-ins to see if you can identify which sign-ins could be related to this incident. For example, if this incident had to do with multiple failed login attempts, then find failed sign-ins in the Entra or UAL Logs around the same time this incident was created”

The result on the 2nd attempt was:

“Unfortunately, there were no IPv4 addresses associated with the incident titled ‘Multiple failed user log on attempts to an app’ involving user ‘john doe’ and the cloud application Microsoft 365 that occurred at 2024-04-01 15:45:38 UTC.

Additionally, no recent sign-ins were found that could be related to this incident. This could be because the system automatically defaults to a 24-hour time lookup based on the provided or current date and time.

Please provide a specific time range which contains the desired results or consider rephrasing the request.”

This was not helpful, because the failed sign-ins were only 8 hours ago.
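To make the point concrete, here is a minimal sketch of the window check. It assumes the lookup really does default to the 24 hours before the time the prompt is run, as the response suggests, and that I ran the prompt roughly 8 hours after the failed sign-ins; `in_lookback` is a hypothetical helper, not a Copilot API.

```python
from datetime import datetime, timedelta, timezone


def in_lookback(event_time, now, lookback=timedelta(hours=24)):
    """True if event_time falls inside [now - lookback, now]."""
    return now - lookback <= event_time <= now


# Incident timestamp from the Copilot response above.
incident = datetime(2024, 4, 1, 15, 45, 38, tzinfo=timezone.utc)
# Assumption: the prompt was run roughly 8 hours after the failed sign-ins.
prompt_time = incident + timedelta(hours=8)

print(in_lookback(incident, prompt_time))  # True: 8 hours is well inside 24 hours
```

Under those assumptions the sign-ins fall inside the default window, so the 24-hour lookup does not explain the empty result.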

So my 3rd attempt at finding the IP address associated with the failed logon attempts was to leave the Security Copilot page, go back to the Microsoft Defender XDR portal (security.microsoft.com), and see whether Copilot could help me write some KQL to find the IP and check whether it is one of the user’s normal historical IPs.

Here was the prompt I gave Copilot in the Advanced Hunting interface:

“find all failed logins from John Doe in the last 24 hours and determine if they are anomalous. for example, are they from his normal device ID or his normal IP addresses he has used in the past successful logins?”

It generated KQL to find the devices and IPs used in successful sign-ins, and then compared those against the unsuccessful sign-ins.

    let successful_logins = IdentityLogonEvents
    | where AccountUpn == "[email protected]" and Timestamp > ago(24h) and FailureReason == ""
    | summarize make_set(DeviceId), make_set(IPAddress);
    IdentityLogonEvents
    | where AccountUpn == "[email protected]" and Timestamp > ago(24h) and FailureReason != ""
    | extend IsAnomalousDevice = iff(DeviceId in (successful_logins), false, true)
    | extend IsAnomalousIP = iff(IPAddress in (successful_logins), false, true)
    | project Timestamp, DeviceId, IPAddress, IsAnomalousDevice, IsAnomalousIP

It gave me the option to Run the Query, or add it to the Editor.

It failed from the start because the DeviceId column does not exist in the IdentityLogonEvents table. This table contains events from Microsoft Defender for Cloud Apps, as well as events from Microsoft Defender for Identity, but it does not capture the non-interactive sign-ins from Microsoft Entra.

It should have used the AADSignInEventsBeta table, which contains the interactive AadDeviceId column and other valuable device details such as DeviceTrustType, IsManaged, IsCompliant, etc., which are more helpful when trying to determine if failed sign-ins are concerning.

Note: The AADSignInEventsBeta table requires Entra ID P2, which I have, but not all customers do. I would expect Copilot to know I am licensed for this and suggest the best table to use. The documentation page for this table states that all this enhanced schema information will eventually move over to the IdentityLogonEvents table.

The query that ran gave this error:

Here is the updated query, which uses the AadDeviceId column from AADSignInEventsBeta:

    let successful_logins = AADSignInEventsBeta
    | where AccountUpn =~ "John Doe" and Timestamp > ago(7d) and ErrorCode == 0
    | summarize make_set(AadDeviceId), make_set(IPAddress);
    AADSignInEventsBeta
    | where AccountUpn =~ "John Doe" and Timestamp > ago(24h) and ErrorCode != 0
    | extend IsAnomalousDevice = iff(AadDeviceId in (successful_logins), false, true)
    | extend IsAnomalousIP = iff(IPAddress in (successful_logins), false, true)
    | project Timestamp, DeviceName, IPAddress, IsAnomalousDevice, IsAnomalousIP, City
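For readers who want to see the comparison logic outside of KQL, here is a minimal Python sketch of what the query above is trying to do: build a baseline of devices and IPs from successful sign-ins, then flag failed sign-ins that fall outside it. The field names `device_id` and `ip` are hypothetical stand-ins for AadDeviceId and IPAddress, and the sample records are made up.

```python
def flag_failed_signins(successes, failures):
    """Flag failed sign-ins whose device or IP never appears in the successes.

    Each record is a dict with 'device_id' and 'ip' keys (hypothetical field
    names standing in for AadDeviceId and IPAddress).
    """
    known_devices = {s["device_id"] for s in successes}
    known_ips = {s["ip"] for s in successes}
    return [
        {
            **f,
            "is_anomalous_device": f["device_id"] not in known_devices,
            "is_anomalous_ip": f["ip"] not in known_ips,
        }
        for f in failures
    ]


# Made-up sample data for illustration only.
successes = [{"device_id": "dev-1", "ip": "203.0.113.10"}]
failures = [
    {"device_id": "dev-1", "ip": "203.0.113.10"},  # familiar device and IP
    {"device_id": "", "ip": "198.51.100.7"},       # unknown device and IP
]
for row in flag_failed_signins(successes, failures):
    print(row)
```

The sketch keeps the device baseline and the IP baseline as two separate sets, so an IP is only ever compared against known IPs. A KQL version may need two separate `let` statements to get the same effect, since my understanding is that `in` against a multi-column table only uses the first column; that is worth verifying against the KQL documentation before relying on the flags.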

Monitoring Usage

It is important to learn how to monitor and manage the use of security compute units (SCUs) in Copilot for Security. You’ll want to keep an eye on usage to make sure you stay within the boundaries of what you are paying for. You can monitor usage here: https://securitycopilot.microsoft.com/usage-monitoring

I was surprised to see my casual exploration of a single security incident exceed the usage threshold of 1 SCU.

I ran five Copilot interactions in total over a 60-minute period (working on a single case and having it generate some KQL).

Therefore, based on my experience, you would need to budget for 1 SCU per SOC analyst (about $35k per SCU per year) if they will use Copilot for Security continuously. Otherwise, the SOC analyst may get a notification in the middle of an investigation that they need to wait an hour, or contact the stingy admin to buy more SCUs.
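Here is a rough budgeting sketch based on that rule of thumb. It assumes the $2,880 monthly minimum from the prerequisites is the price of a single SCU (which lines up with the roughly $35k per year figure) and that each analyst gets a dedicated SCU; `annual_scu_budget` is a hypothetical helper, and actual pricing should be checked against Microsoft’s current price list.

```python
# USD per month; the minimum price quoted in the prerequisites, assumed to be 1 SCU.
MONTHLY_COST_PER_SCU = 2880


def annual_scu_budget(analysts, scus_per_analyst=1):
    """Yearly cost in USD if every analyst gets scus_per_analyst dedicated SCUs."""
    return analysts * scus_per_analyst * MONTHLY_COST_PER_SCU * 12


for n in (1, 3, 5):
    print(f"{n} analyst(s): ${annual_scu_budget(n):,} per year")
# 1 analyst(s): $34,560 per year
# 3 analyst(s): $103,680 per year
# 5 analyst(s): $172,800 per year
```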

Here is what the documentation page states:

“When an analyst is in the middle of an investigation and the usage is nearing the provisioned capacity limit, a notification is displayed to the analyst in response to a prompt… When the provisioned security compute unit is crossed, the analyst will see an error message stating that due to high usage in the organization Copilot can’t respond to requests. Analysts can’t submit additional prompts at this time. More capacity would become available in the next hour.”
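The behavior described in that quote can be pictured as a simple per-hour budget. The sketch below is a toy model, not Microsoft’s implementation: the class `HourlyCapacity`, the fixed per-prompt cost of 0.25 SCU, and the hard cutoff exactly at the provisioned limit are all assumptions for illustration (real consumption varies by prompt).

```python
class HourlyCapacity:
    """Toy model of a per-hour compute budget (not Microsoft's implementation)."""

    def __init__(self, provisioned_scus):
        self.provisioned = provisioned_scus
        self.hour = None
        self.used = 0.0

    def try_prompt(self, hour, cost):
        """Return True if a prompt costing `cost` SCUs fits in this hour."""
        if hour != self.hour:  # a new hour starts with a fresh budget
            self.hour, self.used = hour, 0.0
        if self.used + cost > self.provisioned:
            return False  # analyst must wait for the next hour
        self.used += cost
        return True


cap = HourlyCapacity(provisioned_scus=1)
# Hypothetical cost of 0.25 SCU per prompt: the fifth prompt in the hour is refused.
print([cap.try_prompt(hour=9, cost=0.25) for _ in range(5)])
# The same prompt is accepted again once the next hour begins.
print(cap.try_prompt(hour=10, cost=0.25))
```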

It’s unfortunate that a SOC analyst in the middle of triaging an event may have to wait an hour for capacity to free up, or that the business has to decide whether to pay an additional ~$35,000 per year for another SCU.

Feature Request

I would like to see an emergency buffer that lets SOC analysts ‘burst’ or ‘borrow’ SCU capacity. Imagine a scenario where you have a single SOC analyst working 8am to 5pm. Wouldn’t it be great if they could bank the unused after-hours SCU capacity and use that horsepower during normal business hours? Otherwise, those after-hours SCUs go to waste if the organization does not have an after-hours SOC.
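To illustrate the idea, here is a small simulation of what such a buffer could look like. It is purely hypothetical: it models the feature I am asking for, not anything Copilot for Security offers today, and the function `simulate_burst`, the 8-SCU bank cap, and the demand figures are invented for the example.

```python
def simulate_burst(hourly_demand, provisioned=1.0, bank_cap=8.0):
    """Serve demand hour by hour, banking unused capacity for later bursts.

    Returns a list of unmet demand per hour. Purely hypothetical: this
    models the requested feature, not an existing product behavior.
    """
    bank = 0.0
    unmet = []
    for demand in hourly_demand:
        available = provisioned + bank
        served = min(demand, available)
        unmet.append(demand - served)
        # Whatever was not used this hour is carried over, up to bank_cap.
        bank = min(available - served, bank_cap)
    return unmet


# 8 idle overnight hours followed by 4 busy hours needing 2 SCUs each.
demand = [0.0] * 8 + [2.0] * 4
print(simulate_burst(demand))                # with banking: no unmet demand
print(simulate_burst(demand, bank_cap=0.0))  # without banking: 1.0 SCU short in each busy hour
```

With a single provisioned SCU, the banked overnight capacity fully covers the four busy hours in this example, whereas without banking the analyst is short by one SCU in every busy hour.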